One magical question – the so-called ultimate question – and one simple formula – the well-known Net Promoter Score – are the ultimate measures of customer satisfaction and the ultimate predictors of a company’s future success.

These are the assertions of the book The Ultimate Question by Fred Reichheld, a Bain & Company consultant. The same assertions are repeated and expanded upon in a follow-up book, The Ultimate Question 2.0, by Reichheld and Rob Markey, also a Bain consultant. They argue that the ultimate question and NPS drive extraordinary financial and competitive results.

Many chief executives have read these books, heard them discussed at conferences, or listened in on comments about them from other senior executives. The books, the publicity, the conferences, and the favorable press have elevated NPS to almost mythical status, the holy grail of business success. But is there really one ultimate question? Is NPS really the ultimate predictor of success?

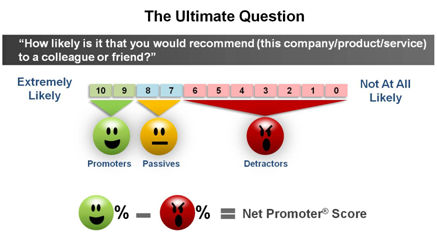

The ultimate question is: How likely is it that you would recommend this product, service, company, etc. to a colleague or friend? The answer scale is 10-to-zero, with 10 defined as extremely likely to recommend and zero defined as not at all likely to recommend.

The NPS is calculated from the answers to the 10-to-zero scale. Respondents who give a 10 or a nine rating are grouped together and called promoters. Respondents with eight and seven ratings are called passives, and those who give a rating of six or below are called detractors. The NPS formula is the percentage of respondents classified as promoters minus the percentage classified as detractors.
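A minimal Python sketch of the calculation just described. The function names and the sample ratings are hypothetical, for illustration only. It assumes, as is the usual convention, that both percentages are computed over all respondents, passives included, so the score can range from -100 to +100.

```python
from collections import Counter


def classify(rating: int) -> str:
    """Map a 0-10 rating to the NPS category (hypothetical helper)."""
    if not 0 <= rating <= 10:
        raise ValueError(f"rating must be between 0 and 10, got {rating}")
    if rating >= 9:
        return "promoter"
    if rating >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(ratings: list[int]) -> float:
    """Percent promoters minus percent detractors, over all respondents."""
    if not ratings:
        raise ValueError("need at least one rating")
    counts = Counter(classify(r) for r in ratings)
    n = len(ratings)  # passives are part of the base even though they add nothing
    return 100 * (counts["promoter"] - counts["detractor"]) / n


# Made-up answers from ten respondents: 3 promoters, 3 passives, 4 detractors.
sample = [10, 9, 9, 8, 8, 7, 6, 5, 3, 0]
print(net_promoter_score(sample))  # -10.0
```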

Here are some observations about the ultimate question and NPS. Let’s start with the positives, and then move to the negatives.

Positives

The question itself is a good one. It’s clear and easy to understand.

The 10-to-zero rating scale is widely used and generally accepted as a sensitive scale (i.e., it can accurately measure small differences from person to person).

The labels on the scale’s endpoints (extremely likely = 10 and not at all likely = zero) are clear and understandable.

Negatives

However, there is ambiguity.

The individual numbers on the 10-to-zero answer scale (except for the endpoints) are not labeled or defined. That leaves us wondering what someone’s answer really means. Is a seven or eight rating positive, neutral, or negative? Some people tend to give high ratings while others tend to give low ratings, and these personal habits have even more influence when most of the points on the scale are not precisely defined.

The NPS formula also is imprecise because of lost information. Here’s how NPS loses information:

• The NPS counts a 10 answer and a nine answer as equal. Isn’t a 10 better than a nine? This information (that a 10 is better than a nine) is lost in the formula.

• If someone answers an eight or a seven, the answer counts as neither a promoter nor a detractor; it enters the formula only as part of the total number of respondents on which the percentages are based. So nearly all of the information in an eight or a seven answer is lost, including the difference between the two.

• The NPS counts a six answer the same as a zero answer, a five answer the same as a zero answer, a four answer the same as a zero answer, and so on. Isn’t a six answer much better than a zero answer? Isn’t a five answer better than a zero answer? So most of the information in answers six, five, four, three, two, and one is lost in the NPS formula, because it counts all of these six-to-zero ratings as equal to zero.

• In effect, the NPS converts a very sensitive 10-to-zero answer scale into a crude three-category scale in which only two categories (promoters and detractors) move the score, and much of the information contained in the original answers is lost.
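To make this concrete, here is a small Python sketch with hypothetical helper names and made-up ratings. Scoring each promoter as +1, each passive as 0, and each detractor as -1, then averaging those codes and multiplying by 100, gives exactly the percentage of promoters minus the percentage of detractors. Two very different groups of answers collapse to identical codes and therefore to an identical score.

```python
def nps_code(rating: int) -> int:
    """Collapse a 0-10 rating to +1 (promoter), 0 (passive) or -1 (detractor)."""
    if not 0 <= rating <= 10:
        raise ValueError(f"rating must be between 0 and 10, got {rating}")
    if rating >= 9:
        return 1
    if rating >= 7:
        return 0
    return -1


def net_promoter_score(ratings: list[int]) -> float:
    """NPS written as 100 times the average of the collapsed codes."""
    codes = [nps_code(r) for r in ratings]
    return 100 * sum(codes) / len(codes)


# Made-up groups of four respondents each, chosen only to illustrate the point.
lukewarm = [9, 9, 6, 6]
polarized = [10, 10, 0, 0]
for group in (lukewarm, polarized):
    # Both groups print the codes [1, 1, -1, -1] and a score of 0.0.
    print(group, [nps_code(r) for r in group], net_promoter_score(group))
```

The lukewarm group's detractors gave sixes and the polarized group's gave zeros, yet the formula cannot tell them apart.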

A far better measure than the NPS is a simple average of the answers to the 10-to-zero scale, where a 10 answer counts as a 10, a nine answer counts as a nine, an eight answer counts as an eight, and so on down to zero. This produces an average score somewhere between zero and 10 that uses every answer at its full value, so no rating is discarded or lumped together with another.
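The simple average is just as easy to compute. In this Python sketch (hypothetical function name, made-up ratings), the two groups both have an NPS of zero, since each contains two promoters and two detractors, yet their averages differ sharply.

```python
def average_score(ratings: list[int]) -> float:
    """Simple average of 0-10 answers; each rating keeps its own value."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    return sum(ratings) / len(ratings)


lukewarm = [9, 9, 6, 6]     # two promoters, two detractors who gave sixes
polarized = [10, 10, 0, 0]  # two promoters, two detractors who gave zeros
print(average_score(lukewarm))   # 7.5
print(average_score(polarized))  # 5.0
```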

The NPS also involves misnomers. The terms promoters, passives, and detractors are curious. If people answer with a 10 or a nine rating, it would seem defensible to classify them as promoters (i.e., people highly likely to recommend your brand or company). Calling those with eight or seven ratings passives is highly questionable. An eight or seven rating is pretty darn good, and one might conclude that the individuals who give those ratings are also likely to recommend your brand. So the name passive is a misnomer.

The real sin, however, is using the term detractor in this context. Nowhere on the answer scale is there a place to record that someone is likely to recommend that people not buy your brand. That end of the scale says not at all likely to recommend. Not likely to recommend is a far cry from being a detractor (i.e., someone who actively tells friends not to buy your brand or someone who makes negative remarks about your company).

Let’s further explore the recommendation metric. The likelihood that someone will recommend a brand or company varies tremendously from product category to product category.

Someone may recommend a car dealership, or a restaurant, or a golf course (high-interest categories) but not mention a drugstore, gas station, bank, or funeral home (low-interest categories). If customer recommendations are not a major factor in your product category, then the NPS might not be a worthwhile measure for your brand.

A sound strategy is to tailor the customer experience questions to your product or service and to your business goals. Use multiple questions that measure customer experience relevant to your company. Don’t buy into the illusion of universal truth or the promise of an ultimate question. Don’t fall for simple answers to complex questions.

So, if the ultimate question is not really the ultimate question, then what are some best practices to create better questions to measure customer satisfaction?

The first rule is to do no harm. Your attempts to measure customer satisfaction should not lower your customers’ satisfaction.

This means that questionnaires should be simple, concise, and relevant. Use very simple rating scales (yes/no; very good/somewhat good/not good; excellent/good/fair/poor). Such short word-defined scales are easy for customers to answer, and the results are easy to explain to executives and employees. Moreover, short, simple scales work well on PCs, tablet computers, and smartphones.

Questionnaires also should almost always begin with an open-ended question, to give customers a chance to tell their stories. A good opening question might be: Please tell us about your recent experience of buying a new Lexus from our dealer in north Denver.

This open-ended prompt gives the customer the opportunity to explain and complain; it communicates that you are really interested in the customer’s experiences; it conveys that your company is really listening. Then you can ask rating questions about various aspects of the customer’s experience, but keep these few in number.

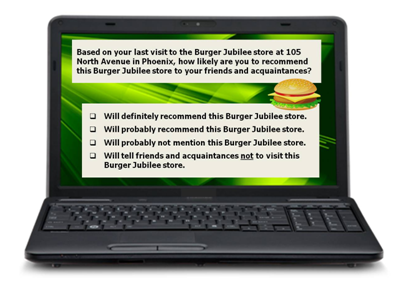

Most satisfaction questionnaires are much too long. If you want to include a recommendation question, you might consider something similar to the following (this is a restaurant example, so remember that the exact wording must be tailored to your product, company and situation):

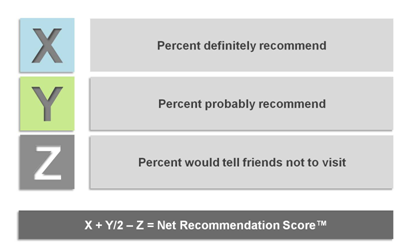

With this question and answer scale, it’s possible to calculate a net recommendation score according to the following formula:

The recommendation question with its well-defined answer choices and the net recommendation score formula give you a much more precise measure of the net influence of customer recommendations than the ultimate question and the NPS.

Jerry W. Thomas is president and chief executive of Decision Analyst Inc. (www.decisionanalyst.com).

Edited by Dominick Sorrentino